Every time you shop online or download a cloud-based app, an invisible force powers your experience: the data center. These facilities, packed with servers and supporting infrastructure, form the foundation of cloud computing and enable the digital services we rely on every day. The global data center market, estimated at $217 billion in 2023, is expected to grow at a compound annual growth rate (CAGR) of 10.5 percent through 2030, driven by exploding demand for cloud-based services. Data centers are the unsung heroes of the digital economy, providing stability, scalability, and security for businesses and consumers alike.

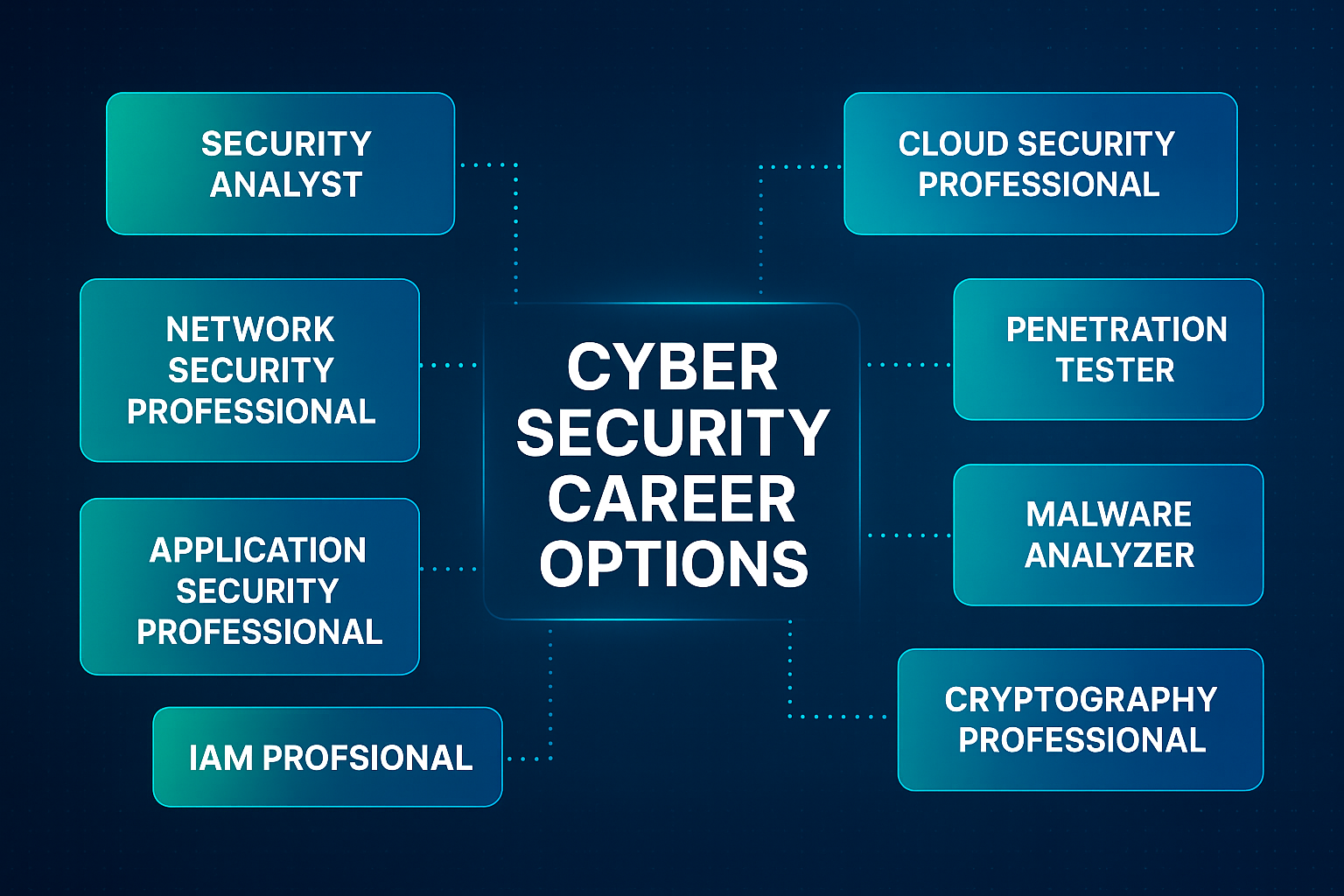

The Dlan.ai blog unpacks the nuts and bolts of cybersecurity to keep your digital world safe! If you’ve been exploring ways to protect your systems, you’ve likely come across terms like Vulnerability Assessment (VA), Penetration Testing (PT), and Red Teaming. They all sound like heavy hitters in the fight against cyberattacks, but what sets them apart? Are they just different flavors of the same thing, or do they serve unique purposes?

In today’s digital world, where hackers seem to lurk around every corner, keeping your systems safe isn’t just smart—it’s essential. We’ve all heard those scary stories about massive data breaches that cost companies millions and ruin reputations overnight. But what if I told you there are proactive ways to stay one step ahead? That’s where Vulnerability Assessment (VA) and Penetration Testing (PT) come into play. These aren’t just buzzwords; they’re powerful tools that help spot weaknesses before the bad guys do.

In today’s digital landscape, cybersecurity is more critical than ever. With cyber threats evolving rapidly, businesses and individuals alike need advanced tools to identify vulnerabilities and secure their systems.

According to recent reports, the global cost of cybercrime is projected to reach $10.5 trillion annually by 2025, and 73% of successful breaches in 2024 were attributed to vulnerable web applications. Regular security testing is no longer optional; it’s a necessity.

Running a small business in 2025 is like driving on a busy highway: exciting, but one wrong move can lead to trouble. With cyberattacks on the rise, keeping your digital assets safe is no longer optional.

Small businesses are prime targets for hackers, with 82% of ransomware attacks hitting them in 2024 (Network Assured). That’s where Vulnerability Assessment (VA) and Penetration Testing (PT) services come in, acting like a GPS to spot risks and guide you to safety. At Dlan.ai, we’re here to help you protect your business with innovative, affordable cybersecurity solutions.

With cyberattacks making headlines daily, businesses must stay one step ahead to protect their digital assets. Two critical cybersecurity practices, Vulnerability Assessment (VA) and Penetration Testing (PT), play distinct roles in securing your systems. Both aim to strengthen defenses, but they differ significantly in approach and impact. Written for business owners, IT teams, and anyone who wants to understand how these tools protect an organization, this guide walks through the top five differences between VA and PT and will help you decide which strategy fits your needs.

In today’s digital world, where cyber threats loom large, businesses and individuals alike are increasingly concerned about protecting their sensitive data. Cybersecurity terms such as Vulnerability Assessment (VA) and Penetration Testing (PT) frequently appear in discussions about securing systems, but what do they mean? If you’re new to cybersecurity or looking to strengthen your organization’s defenses, this beginner-friendly guide will break down VA and PT in simple terms. We’ll explore their differences, why they matter, and how they can protect your business from the growing wave of cyber threats. Additionally, we’ll include the latest data breach statistics to underscore the importance of robust cybersecurity practices.